How to set up A/B/n testing

Aug 21, 2023

A/B/n testing is like A/B testing, except you compare multiple (n) variants instead of just two. It is especially useful for small but impactful changes where many options are available, like copy, styles, or pages.

This tutorial will show you how to create and implement an A/B/n test in PostHog.

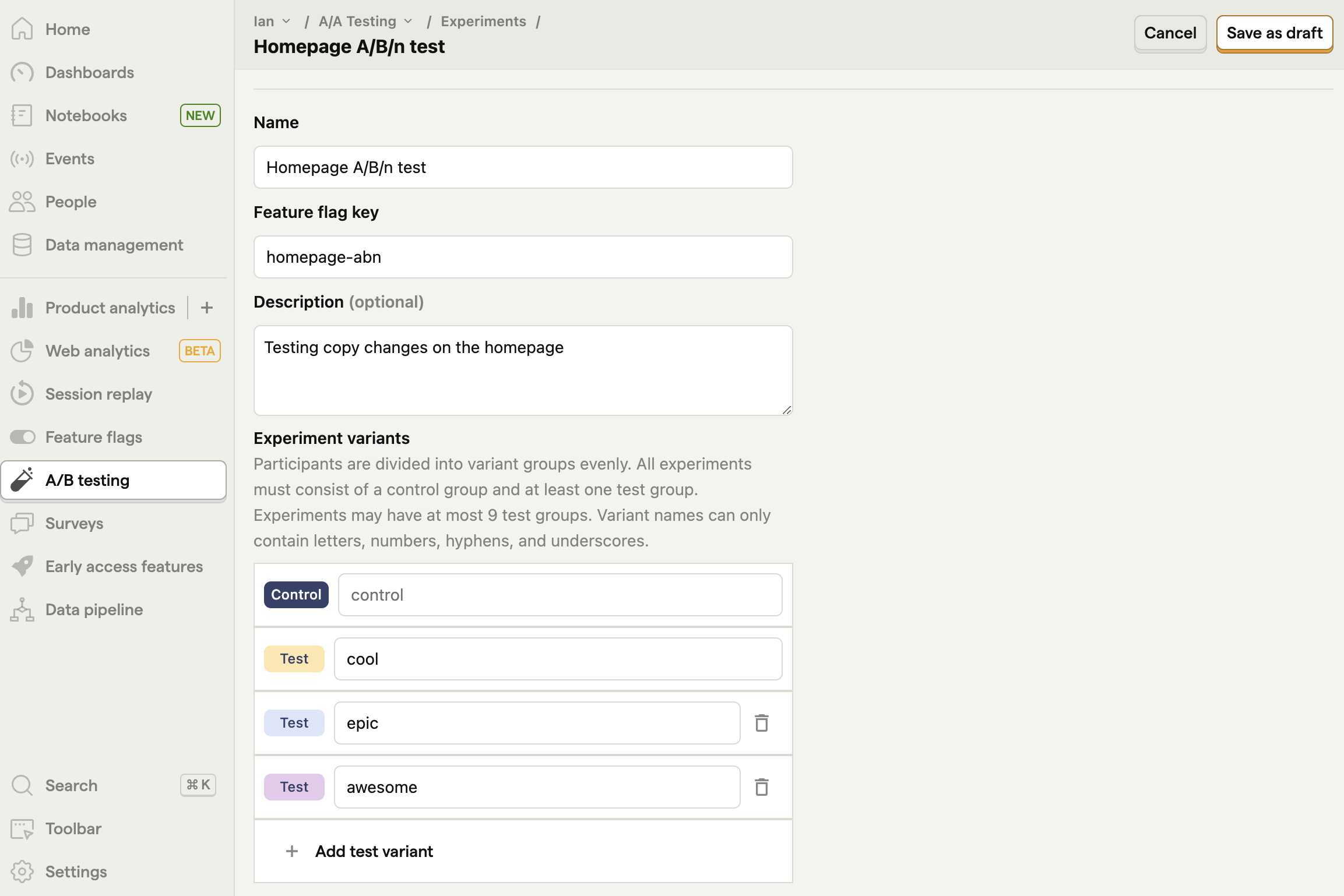

Creating an A/B/n test

To create an A/B/n test, go to the experiments tab in PostHog:

- Click "New experiment."

- Add a name and description.

- Add a feature flag key. In your code, you use this feature flag key to check which experiment variant the user has been assigned to.

- Under "Experiment variants," click "Add test variant" twice to add two new variants. Rename the three variants if you want.

Fill out the rest of your details like participants, goal type, secondary metrics, and minimum acceptable improvement. Once done, click "Save as draft".

Next, we'll implement the A/B/n test in our app.

Implementing our A/B/n test

Implementing our test requires checking the feature flag to see which experiment variant our user has been assigned to. We then add code to handle each variant.

We will use a Next.js app where we already set up the [PostHogProvider](/docs/libraries/next-js#app-router) for this.

Using a different library or framework? See our experiment docs on how to add your code using our different SDKs.

In our `page.js` file, we:

- Import `useFeatureFlagVariantKey` from `posthog-js/react`, and `useState` and `useEffect` from `react`.

- Set up a state for `mainCopy` using `useState`.

- Set the `mainCopy` state in a `useEffect` using the variant key value we get from PostHog.

- Show the `mainCopy` state to users in our component.

Together, this looks like this:
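A minimal sketch of the variant-handling part of these steps, written as plain JavaScript so it can run outside the app. The variant keys (`control`, `test-1`, `test-2`), the copy strings, and the flag key `main-copy-experiment` are hypothetical placeholders; use the keys from your own experiment. The trailing comment shows how the helper might be wired into `page.js` with `useFeatureFlagVariantKey`, `useState`, and `useEffect`.

```javascript
// Hypothetical variant keys and copy; replace them with your experiment's own.
const COPY_BY_VARIANT = new Map([
  ['control', 'Welcome to our app'],
  ['test-1', 'Build better products, faster'],
  ['test-2', 'Ship with confidence'],
])

// Returns the copy for a variant key. Falls back to the control copy when the
// flag has not loaded yet (undefined) or the key is not one we handle.
function copyForVariant(variantKey) {
  return COPY_BY_VARIANT.get(variantKey) ?? COPY_BY_VARIANT.get('control')
}

// Possible wiring inside the page.js component (not executed here):
//   const variant = useFeatureFlagVariantKey('main-copy-experiment')
//   const [mainCopy, setMainCopy] = useState(copyForVariant(undefined))
//   useEffect(() => { setMainCopy(copyForVariant(variant)) }, [variant])
//   return <h1>{mainCopy}</h1>
```

Falling back to the control copy keeps the page stable while flags are still loading, though the title may change once the variant arrives.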

When we reload our page, we get a title based on the flag key variant.

Testing the other variants

We can check the other variants by going to the feature flag page, searching for the key related to our experiment, and clicking on it to edit it. Scroll down to release conditions and set the optional override to any of the flag values.

If you want the optional override to apply only to you, create another condition set with a condition like `utm_source = awesome`, then use the link http://localhost:3000/?utm_source=awesome. Alternatively, identify your user with an email and use a condition like `email = your_email@domain.com`. Finally, press save. When you go back to your app, you see the overridden flag value.
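To avoid typos when building the override link, it can be generated with the standard `URL` API. This is a small sketch: `buildOverrideUrl` is a hypothetical helper name, and the base URL and property are the ones from the example condition.

```javascript
// Hypothetical helper: appends the query parameter that the release
// condition matches on (utm_source = awesome in the example condition).
function buildOverrideUrl(baseUrl, property, value) {
  const url = new URL(baseUrl)
  url.searchParams.set(property, value)
  return url.toString()
}

console.log(buildOverrideUrl('http://localhost:3000', 'utm_source', 'awesome'))
// prints "http://localhost:3000/?utm_source=awesome"
```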

Note: Make sure to remove the optional override before rolling out your A/B/n test to real users.

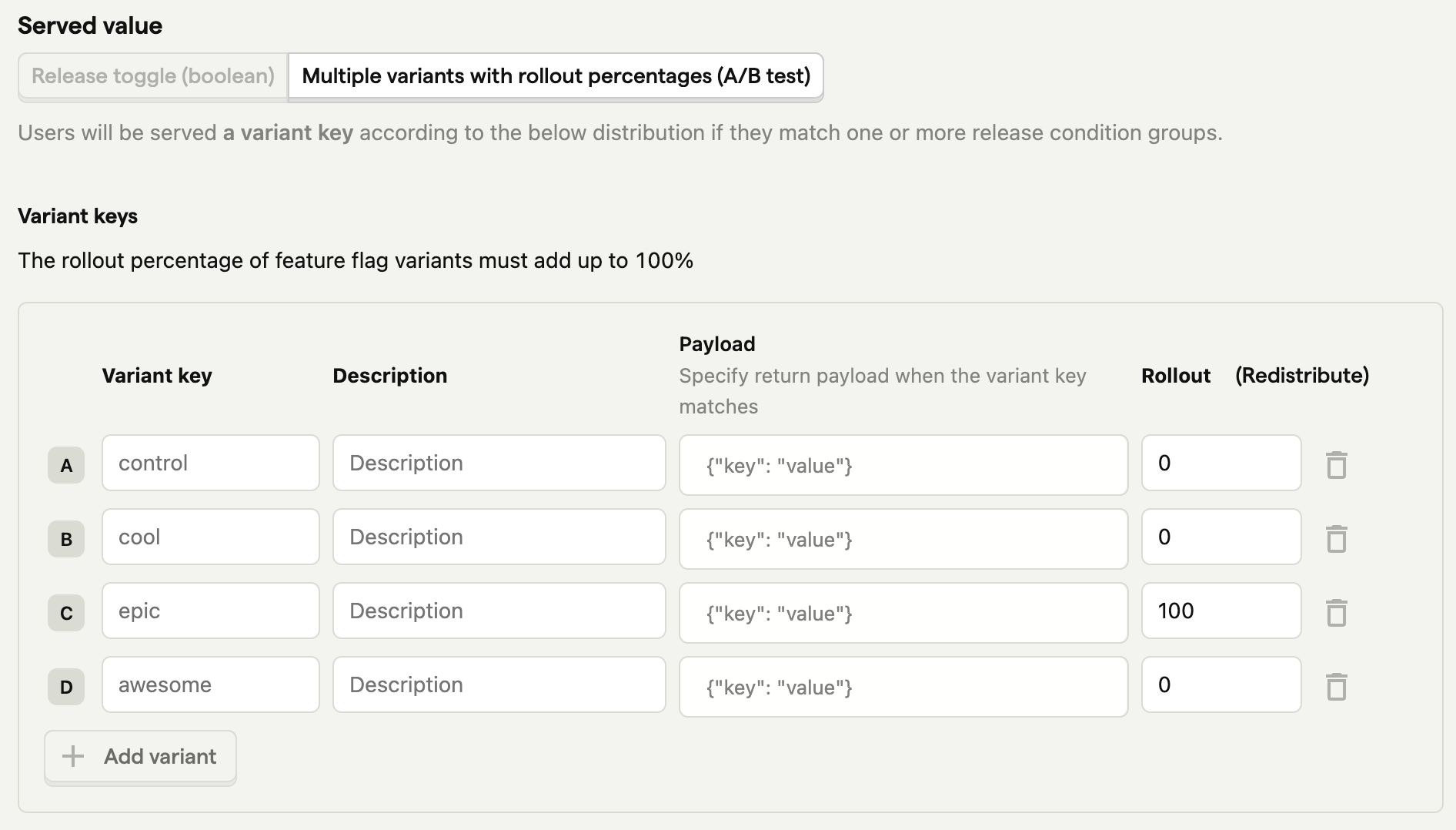

Rolling out the winning variant

Once your experiment has concluded, you can stop it and roll out the winning variant.

To do this, click the "stop" button on your experiment details page, go to your feature flag page, search for the key related to your experiment, and click to edit it. Under variant keys, edit the rollout value to 100 for the winning variant and 0 for the losing ones, and press save. When you’re ready, you can remove the experiment-related code from your app too.
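The rollout change can be pictured as a transformation on the flag's variant list. The sketch below assumes each variant is an object with `key` and `rollout_percentage` fields. That shape and the `rollOutWinner` helper are assumptions for illustration rather than a confirmed PostHog API, and the variant keys are hypothetical.

```javascript
// Assumed shape: [{ key: 'control', rollout_percentage: 34 }, ...].
// Returns a new list with the winner at 100 and every other variant at 0.
function rollOutWinner(variants, winnerKey) {
  if (!variants.some((variant) => variant.key === winnerKey)) {
    throw new Error(`Unknown variant key: ${winnerKey}`)
  }
  return variants.map((variant) => ({
    ...variant,
    rollout_percentage: variant.key === winnerKey ? 100 : 0,
  }))
}

// Hypothetical starting split across three variants.
const variants = [
  { key: 'control', rollout_percentage: 34 },
  { key: 'test-1', rollout_percentage: 33 },
  { key: 'test-2', rollout_percentage: 33 },
]
console.log(rollOutWinner(variants, 'test-2'))
```

Checking that the winner exists before mapping guards against accidentally setting every variant to 0.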

Further reading

- How to do holdout testing

- How to do A/A testing

- How to use Next.js middleware to bootstrap feature flags